By David A. M. Goldberg with Claudia Lo and Margeigh Novotny

There are over 6 million articles on the English-language version of Wikipedia, and 217 million more across the 731 other wikiprojects worldwide. These numbers are mind-boggling when one considers the human effort it takes to improve and police this much content, let alone to monitor contributors’ online activity in order to detect and mitigate harassment. Even so, the Wikimedia Foundation and the Free Knowledge Movement remain vigilant, dedicated to cultivating the most democratic practices and to confronting some of the most antagonistic elements of Internet culture that Hermosillo[1] recognized and theorized about so long ago: the “black hole”[1] of toxicity, hypernormalization, deepfakes and mass sock puppetry[2] that define online culture today.

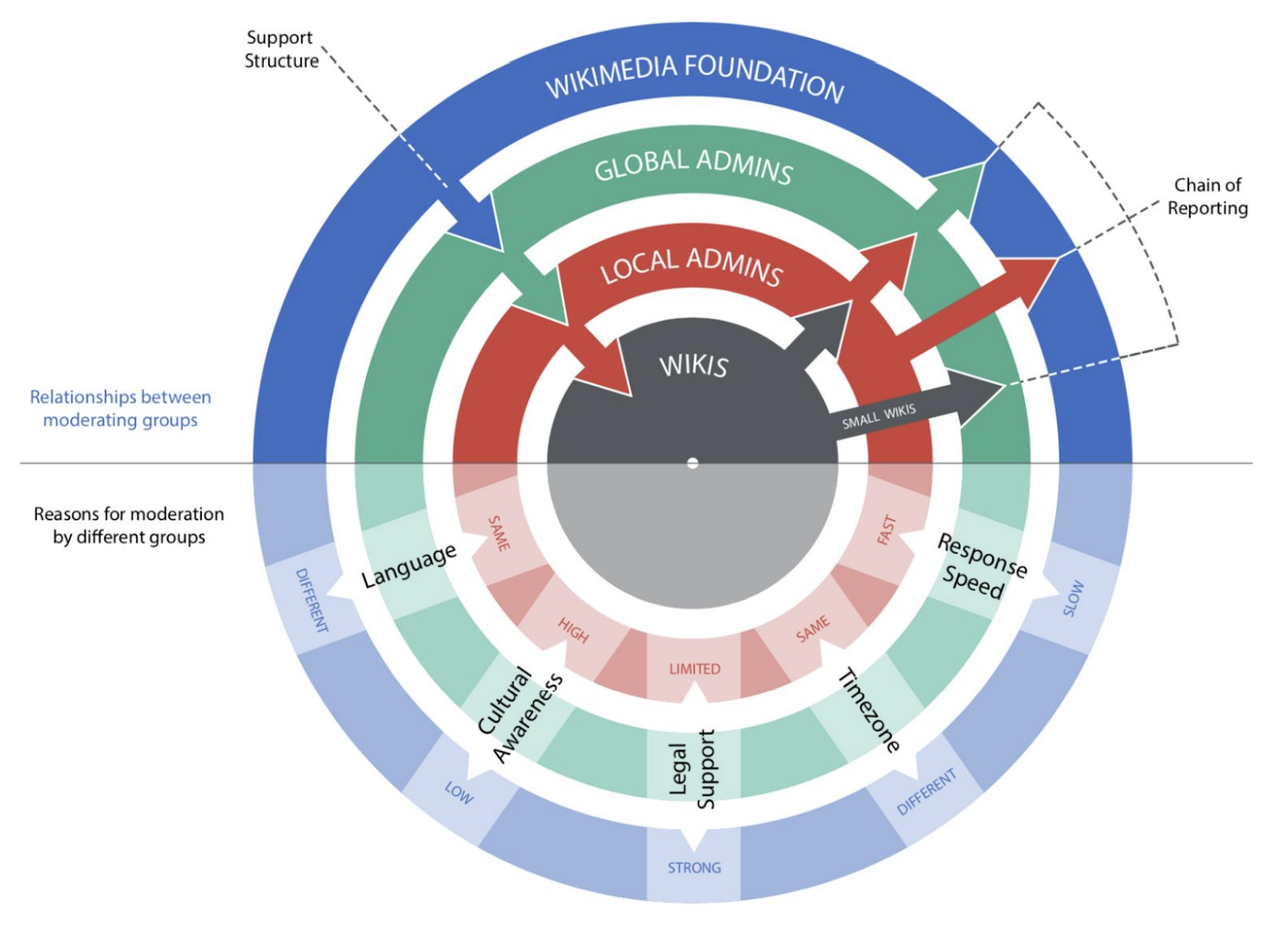

How, then, does a free and open volunteer-driven project like Wikipedia manage disinformation? The truth is that considerable effort goes into mitigating user harassment and article vandalism (editing in bad faith or with malicious intent) on Wikipedia. I spoke to Claudia Lo, design researcher on the Wikimedia Foundation’s Anti-Harassment Tools team, and learned that the Foundation’s commitment to establishing ever-widening circles of inclusive participation makes one-size-fits-all solutions hard to come by, whether they are policy-driven or technical. What remains consistent, however, is the need for active human participation in moderation processes that involve complex technical and ethical challenges.

Lo’s background includes practical “in the trenches” experience as a volunteer moderator on Reddit and Discord, and academic media studies research that focused on the tools and technologies that Twitch moderators used during video game tournaments. Unlike these platforms, where discourse is transient and content is relatively unregulated, Wikipedia holds contributions to editorial standards that call for objective, citation-driven writing. Wikipedia deals in truth and factuality over opinion and speculation, so when antagonism and disruption do emerge, the stakes are higher. Though Wikipedia does not consider itself a reliable source, it is trustworthy enough to attract those who would exploit privileges that the platform extends to all users in order to sow dissent, attack others, disrupt collaboration, push agendas, execute vendettas, and cultivate uncertainty. Such “bad actors” may see nothing of value in the Foundation’s mission to make all of the world’s knowledge available to all of the world’s people, but they know that a considerable portion of cyberspace’s netizens do, and that third-party search and knowledge base systems are making increasing use of its product.

Wikipedia content is always in progress or in a state of being edited, so how does one distinguish among active trolling, fierce but otherwise healthy partisanship, genuine disputes, and naivete or inexperience? Lo has seen it all: poor writing and incompetently structured pages, frivolous vanity articles, edit wars over celebrity birthdays, and people seeking revenge on their high school alma maters. There are also behind-the-scenes battles and truly ugly behavior on the talk pages behind articles, as well as intense (and possibly state-sponsored) conflicts surrounding polarizing political figures and historical events. Fortunately, the emotional or epistemological aspects of vandalism and harassment can be separated from their technical ones, because such behavior leaves patterns in server logs and edit histories.

Just as a denial-of-service attack on a web server can be automatically recognized based on the source and frequency of requests, a Wikipedia article that sees a high number of edits in a short period of time, or many edits coming from the same IP address or addresses, can be flagged as potential vandalism. Because the ability to contribute and edit anonymously keeps barriers to participation low, reducing vandalism by banning accounts or by blocking individual Internet Protocol (IP) addresses or ranges of them contradicts core aspects of the Wikimedia philosophy. As Hermosillo warned, “cyberspace is an increasingly efficient tool of surveillance with which people have a voluntary relationship,” so restricting contribution to registered users challenges the WMF’s commitment to privacy, as does the need to retain usage logs in order to perform systematic analysis of accounts associated with abusive behavior.
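To make the pattern-matching idea concrete, here is a minimal Python sketch of flagging an article when its recent edits look like a burst. It is only an illustration of the general heuristic described above, not a description of any tool Wikipedia actually runs. The `Edit` record, the `flag_bursts` function, and every threshold value are assumptions invented for this example.

```python
from collections import Counter, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Edit:
    """Hypothetical edit record; real edit histories carry far more detail."""
    article: str
    source_ip: str
    timestamp: float  # seconds since an arbitrary starting point


def flag_bursts(edits, window_seconds=600.0, max_edits=5, max_per_ip=3):
    """Map each suspicious article to the first reason it was flagged.

    The thresholds are illustrative assumptions, not values taken from
    any real moderation system.
    """
    recent = {}   # article -> deque of edits inside the sliding window
    flagged = {}  # article -> reason string
    for edit in sorted(edits, key=lambda e: e.timestamp):
        window = recent.setdefault(edit.article, deque())
        window.append(edit)
        # Discard edits that are older than the sliding window allows.
        while window[0].timestamp < edit.timestamp - window_seconds:
            window.popleft()
        per_ip = Counter(e.source_ip for e in window)
        if len(window) > max_edits:
            flagged.setdefault(edit.article, "high edit rate")
        elif per_ip[edit.source_ip] > max_per_ip:
            flagged.setdefault(edit.article, "repeated edits from one address")
    return flagged
```

A flag of this kind marks an article as a candidate for human review and nothing more. A breaking news story can produce the same burst of edits as a coordinated attack, which is part of why people remain in the loop.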

For their part, IP blocking tactics are not only surmountable by dedicated bad actors, but they also run afoul of broader protocol-related issues, such as IP addresses that are assigned dynamically or in blocks. The risk that blanket restrictions will cut off users who have not been identified as bad actors is real. And yet tools that facilitate blocks, track spurious accounts, and initiate bans are the primary means of thwarting abuse. In typical Wikimedia fashion, the chosen solution does not involve restricting access to these tools (thereby creating a kind of police force) but making them more accessible to the broader community.
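The collateral-damage problem can be sketched with Python's standard `ipaddress` module. The `collateral` function and the addresses below are hypothetical, chosen from ranges reserved for documentation, and the sketch assumes a moderator already has a list of currently active addresses, which may not be true in practice.

```python
import ipaddress


def collateral(block_range, known_bad, active_users):
    """List active addresses that a range block would hit without cause.

    block_range  -- CIDR string for the proposed block, e.g. "203.0.113.0/24"
    known_bad    -- addresses already tied to abusive behavior
    active_users -- addresses of everyone currently contributing
    All three inputs are illustrative assumptions.
    """
    network = ipaddress.ip_network(block_range)
    bad = {ipaddress.ip_address(addr) for addr in known_bad}
    caught = []
    for addr in active_users:
        ip = ipaddress.ip_address(addr)
        if ip in network and ip not in bad:
            caught.append(str(ip))
    return caught


if __name__ == "__main__":
    proposed = "203.0.113.0/24"
    # A /24 range covers 256 addresses, so one block reaches many people.
    print(ipaddress.ip_network(proposed).num_addresses)
    print(collateral(proposed,
                     known_bad=["203.0.113.7"],
                     active_users=["203.0.113.7", "203.0.113.20", "198.51.100.4"]))
```

Even this toy case shows the trade-off: blocking the range to stop one address also shuts out a neighbor who did nothing wrong, and with dynamically assigned addresses the offender may simply reappear elsewhere.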

Working with moderation technology requires the ability to parse and cross-reference lots of information, to recognize patterns, and to pay attention to constantly shifting details. Such forensic skills are not exclusive to any one group of people, but the complexity of the tools themselves restricts their use to those who are already in the technical and/or cultural position to use them. As a designer, Claudia Lo wants to make these tools easier to use; right now they can involve a headache-inducing combination of spreadsheets and dozens of browser tabs. Put another way, Lo believes that one should not have to be an alpha nerd in order to contribute to the community moderation process.

I was surprised to hear Lo describe this as a “utilitarian” effort, but as I listened to her describe the straightforward strategies that Twitch moderators used to do their jobs, I came to understand that this remains a deeply human challenge. AI may eventually be able to execute some of the Twitch moderators’ heuristics, such as using lists of inappropriate words or phrases in various languages in conjunction with real-time parsing of text streams’ content and points of origin. But Wikipedia’s problems are not reducible to flagging inappropriate language and booting trolls, especially in a political era that has considerable trouble grappling with issues of censorship, and with what “free” speech and expression mean when one’s identity online is effectively disposable.
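For readers curious about what a word-list heuristic looks like in practice, the following Python sketch matches chat messages against a list of terms. It is a simplified assumption about how such a filter might work, not a reconstruction of the tools Twitch moderators use. The function names and the placeholder terms are invented for illustration.

```python
import re


def build_matcher(terms):
    """Compile a case-insensitive, whole-word pattern from a term list."""
    if not terms:
        raise ValueError("at least one term is required")
    # Try longer terms first so multi-word phrases win over their prefixes.
    ordered = sorted(terms, key=len, reverse=True)
    pattern = "|".join(re.escape(term) for term in ordered)
    return re.compile(rf"\b(?:{pattern})\b", re.IGNORECASE)


def flag_messages(messages, matcher):
    """Return the messages that contain any listed term."""
    return [message for message in messages if matcher.search(message)]


if __name__ == "__main__":
    # Placeholder terms standing in for a real multilingual list.
    matcher = build_matcher(["spamword", "mot interdit"])
    stream = ["Hello there", "this is SPAMWORD!", "un mot interdit ici"]
    print(flag_messages(stream, matcher))
```

A filter like this is easy to evade with a misspelling and blind to context, which illustrates why such heuristics only go so far on a project where the disputes are about facts and framing rather than vocabulary.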

I got the impression from Lo that there are no magic bullets to be crafted, but this is far from a concession or a retreat. The Anti-Harassment Tools team’s approach is consistent with the Foundation’s tendency to improve the system incrementally, in cooperation with community volunteers. In this particular case, applying human-centered design to the overwhelming complexity of moderation tools will give more people the opportunity to address vandalism and harassment in their respective communities, rather than relying exclusively on a highly technical, top-down moderation strategy.